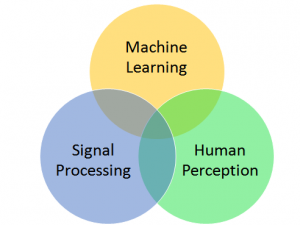

The Multimodal Perception lab focuses on human-centered sensing and multimodal signal processing methods to observe, measure, and model human behavior. These methods are used in applications that facilitate behavioral training and enable human-agent interactions (HRI). The focus is mainly on the vision and audio modalities. Deep neural networks form the backbone of the underlying formalism. The lab's specialities include Multimodal Skill Assessment, Multimodal Conversational Agents, and Indian Sign Language Synthesis.

News: MULTIPLE Research Assistant/Associate positions available. Please email jdinesh at iiitb dot ac dot in

News: Congrats to Anirban Mukherjee on the journal paper accepted in Information Fusion (impact factor 18.6)!

News: ICMI 2022 and CODS-COMAD 2024 successfully organized in Bangalore!

MPL Lab Academic Reco Policy: [PLEASE DO NOT ASK FOR COURSE/PE RECOs]

MPL Masters Thesis => PhD reco

Road map Thesis:

MTech: VR (2nd Sem), AVR+PE/RE (3rd Sem), Thesis

iMTech: VR (6th Sem), AVR+PE/RE (7th Sem), PE/RE (8th Sem), Thesis prep (9th Sem), Thesis